AI’s Ancestors

Previous technological disruptions in public health offer insights into AI’s perils and potential.

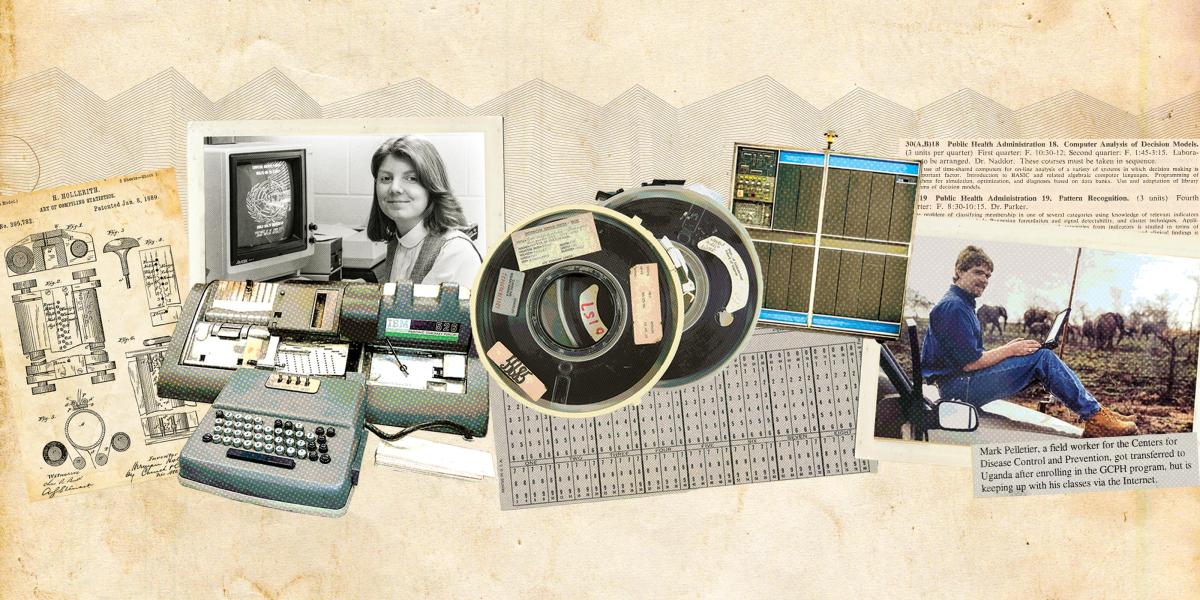

Behold the mighty punch card.

Herman Hollerith’s punch card tabulating machine, first submitted for a patent in 1884 (the patent was granted in 1889), made sorting and counting data by hand obsolete and allowed mere humans to sift through previously inconceivable amounts of information.

Using a keypunch, the operator coded patterns of holes on a paper card. On the standard card IBM introduced in 1928, roughly 3 by 7 inches, the holes were rectangular and arranged in 12 rows and 80 columns. Each hole position represented a piece of data, such as age, sex, or occupation, and a unique ID number linked each card to an individual. Feeding all the cards from a group of people into a tabulating machine produced a rapid count of every category of data across a virtually limitless number of people.
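The keypunch-then-tabulate cycle can be sketched in a few lines of Python. This is a toy model under stated assumptions, not a historical card layout: a card is treated as a set of punched (row, column) positions, rows 0 through 9 stand for digits, and the column assignments in `FIELD_COLUMNS` and the digit codes (for example, 1 and 2 for sex) are hypothetical.

```python
from collections import Counter

# Toy model of a punch card: the set of punched (row, column) positions on a
# 12-row by 80-column grid, with rows 0-9 standing for the digits 0-9.
# HYPOTHETICAL layout: these column assignments are invented for illustration.
FIELD_COLUMNS = {"sex": 1, "age_group": 2, "occupation": 3}

def punch(**codes):
    """Keypunch step: turn digit codes into hole positions, one hole per field column."""
    return frozenset((digit, FIELD_COLUMNS[field]) for field, digit in codes.items())

def read_field(card, field):
    """Sense the hole in a field's column and return its digit (None if blank)."""
    column = FIELD_COLUMNS[field]
    rows = [row for (row, col) in card if col == column]
    return rows[0] if rows else None

def tabulate(cards, field):
    """Tabulator step: advance one counter per category as each card passes through."""
    return Counter(read_field(card, field) for card in cards)

# Each card is filed under a unique ID number that ties it to one person.
cards = {
    101: punch(sex=1, age_group=3),
    102: punch(sex=2, age_group=3),
    103: punch(sex=2, age_group=5),
}
print(tabulate(cards.values(), "sex"))        # Counter({2: 2, 1: 1})
print(tabulate(cards.values(), "age_group"))  # Counter({3: 2, 5: 1})
```

Because a blank column reads as `None`, unpunched fields show up as their own category in the count rather than silently disappearing.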

The punch card revolutionized data collection more than a century ago, and today it offers insights into how a new technology like AI can disrupt public health. For the 1890 U.S. Census, punch cards shortened processing time from eight years to two. Hospitals and public health departments quickly adopted them for crunching health statistics. By the late 1930s, Bloomberg School biostatistician Carroll E. Palmer and Johns Hopkins Hospital director Edwin Crosby were using punch cards to modernize the hospital’s antiquated handwritten records system. Each patient’s unique hospital ID number was attached to their personal and clinical records, opening a portal into the history, course of disease, and treatment of every patient. This linkage let researchers travel backward and forward in time: to calculate, for example, how many men and women admitted in the last five years had died of heart attacks, and how many more could be expected to meet the same fate in the next five.

Linking individuals and their associated data via punch cards became an invaluable tool for epidemiologic and demographic research. This new capacity to see patterns in aggregated data made it possible to extract meaning from ever-larger realms of information. For example, between 1922 and 1947, School faculty and Baltimore City Health Department staff conducted five demographic and health surveys among the 110,000 residents of the Eastern Health District (EHD). Biostatisticians Halbert Dunn and Lowell J. Reed developed a painstaking coding system to record every household’s births, deaths, age, race, and family size, then linked this information to each member’s clinical data. In a 1946 American Journal of Public Health article, Dunn outlined his vision of a national version of the EHD system and introduced the concept of record linkage. A unified database for identity verification that linked each citizen’s birth, marriage, divorce, and death records would be “an invaluable adjunct to the administration of health and welfare organizations,” he wrote. As chief of the U.S. National Office of Vital Statistics, Dunn produced widely cited work in biostatistics, demography, and information science that led to the widespread adoption of record linkage in computer information systems, and later in e-commerce and even AI.
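Record linkage in its simplest, deterministic form can be sketched in Python. The sketch assumes, hypothetically, that every registry stamps its records with the same unique `person_id`; the registries, field names, and dates are invented, and this is not a reconstruction of Dunn’s own procedure, only the core operation of gathering each person’s events into one file.

```python
from collections import defaultdict

# HYPOTHETICAL registries: each record carries a shared, unique person_id.
# Linking on an exact shared ID is an assumption of this sketch.
births = [
    {"person_id": "A1", "date": "1920-03-04"},
    {"person_id": "B2", "date": "1921-07-19"},
]
marriages = [{"person_id": "A1", "date": "1942-06-01"}]
deaths = [{"person_id": "A1", "date": "1975-11-30"}]

def link_records(**registries):
    """Gather every event for each person into one chronological file."""
    linked = defaultdict(list)
    for event_type, records in registries.items():
        for record in records:
            linked[record["person_id"]].append((record["date"], event_type))
    # ISO-format dates sort correctly as strings, so each file reads in time order.
    return {person: sorted(events) for person, events in linked.items()}

files = link_records(birth=births, marriage=marriages, death=deaths)
print(files["A1"])
# [('1920-03-04', 'birth'), ('1942-06-01', 'marriage'), ('1975-11-30', 'death')]
print(files["B2"])
# [('1921-07-19', 'birth')]
```

When no shared ID exists, matching has to rely on names, dates, and other fields instead, which this sketch does not attempt.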

In the late 1940s, Reed chaired the U.S. and WHO committees charged with creating a single international system for classifying diseases, injuries, and causes of death. Drawing on the EHD framework, Reed shepherded the 1948 sixth revision of the International Classification of Diseases, the first to classify illnesses and injuries as well as causes of death. The ICD remains the cornerstone of diagnosis and data analysis for epidemiology, health management, and clinical medicine.


School researchers continued to use record linkage to produce striking findings, such as the role of premature and low-weight births in causing developmental disabilities. Epidemiologists Abraham Lilienfeld and George Comstock, both punch card kings, pioneered ways to efficiently connect family history, lifestyle, environment, and chronic disease data from large study populations to reveal risk factors for chronic disease. And biostatistician Alan M. Gittelsohn argued that expanded computerized matching of vital statistics with medical records could prevent horrific consequences. If health officials had monitored changes in the incidence of birth defects and correlated them with maternal records, he contended, the thalidomide tragedy of the late 1950s and early 1960s, in which at least 10,000 babies were born with severe birth defects, many with missing or malformed limbs, could have been mitigated.

Another lesson from history: Every technological revolution has its unintended consequences. In the 1960s, for example, the high-tech glamour of radiological science motivated School leaders to build the North Wing of the Wolfe Street Building. Generous NIH grants funded the enormous computing and electronics labs of the new Department of Radiological Science (the labs generated such intense heat that the School had to install air conditioning). These infrastructure investments ushered in modern computing (and climate control) throughout the School, and the resulting advances in radiobiology and radiochemistry fundamentally shaped subsequent work on biomarkers and the genomics of environmental health. Yet once scientific interest in the health effects of radiation dimmed, the department faltered, and in the mid-1980s it was absorbed into the Department of Environmental Health Sciences.

To a certain extent, investments in new technologies like AI will always be a gamble. In the mid-to-late 1990s, the School adopted what were then highly experimental technologies and teaching methods to support online learning, including broadband internet and digital multimedia capabilities that connected classrooms with remote students and field sites. This early commitment to bringing high-quality public health education to students who could not live in Baltimore dramatically expanded the School’s reach, and the investment has paid for itself many times over.

The future of AI is unclear at best. AI is both a technological inflection point and a recombination of powerful existing forces (some reaching back to the punch card). As with all technology since fire, it’s futile to predict AI’s uses and consequences—and essential to build in the right safeguards.

- 1919: “Computer” appears in the School’s first catalog and describes a faculty member who conducts biometric research.
- 1922: The School begins using punch cards to link data from 100,000+ Baltimore residents across 5 surveys over 25 years.
- 1962: The Department of Radiological Science rents an IBM 1401 mainframe for $50,000 per year.
- 1966: The Department of Biostatistics offers the first computing course, Programming for Digital Computers.
- 1977: Abe Lilienfeld leads data coding and analysis to determine that microwaves aimed at the U.S. embassy in Moscow did not harm 7,000+ staff and family members.
- 1983: Dean D.A. Henderson establishes the Hygiene Computing Center with JHU’s first individual student terminals.
- 1997: Marie Diener-West and Sukon Kanchanaraksa develop the School’s first online distance education course, Quantitative Methods.
- 1999: The School, under Dean Alfred Sommer, becomes the first school of public health and first JHU division to offer broadband and wireless internet connections.
- 2012: Dean Michael J. Klag brokers an agreement between the School and Coursera to provide Massive Open Online Courses (MOOCs).